Supervisor: Dr. Albert Ting Leung LEE

Background & Objectives

Current Challenges

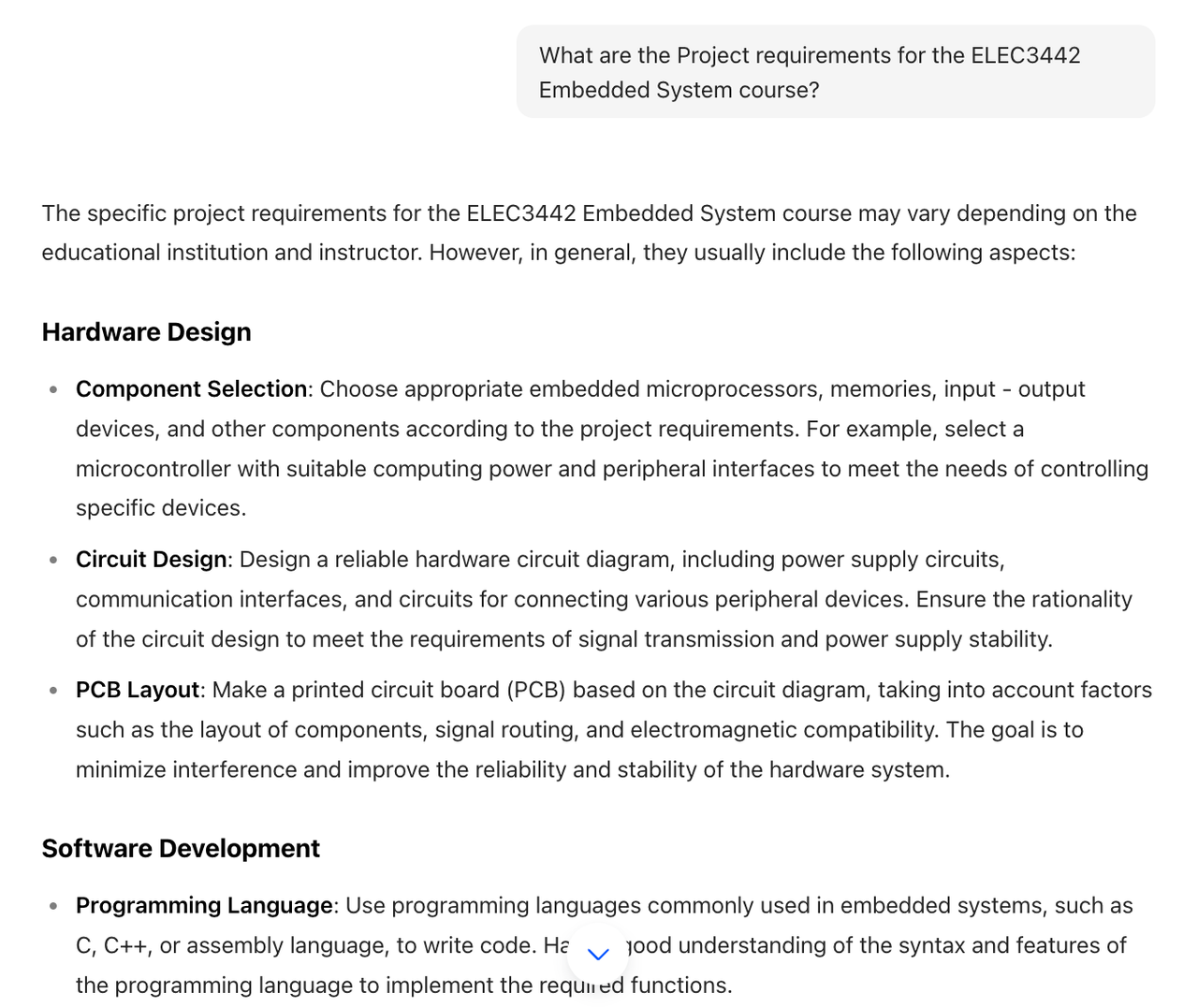

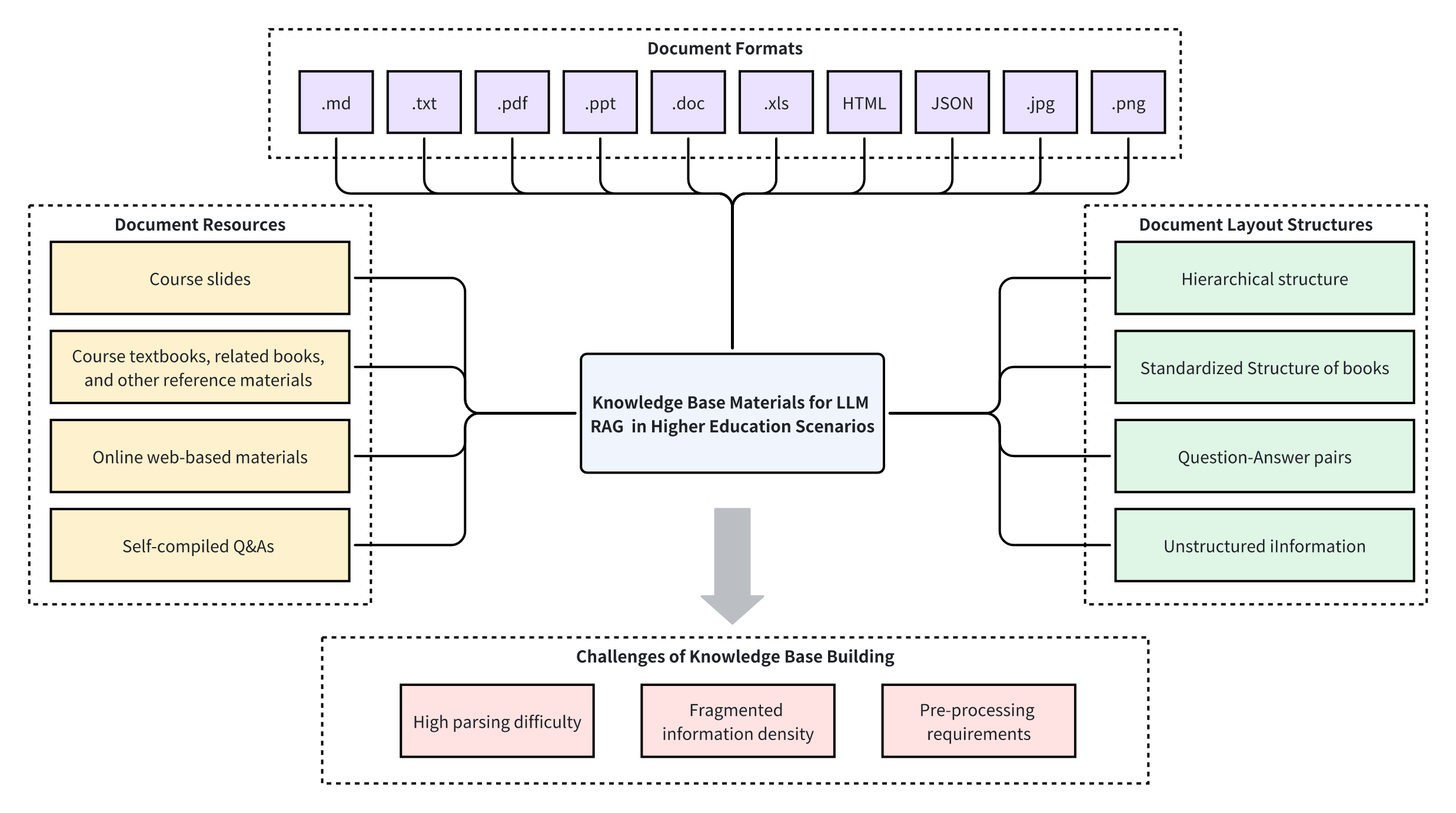

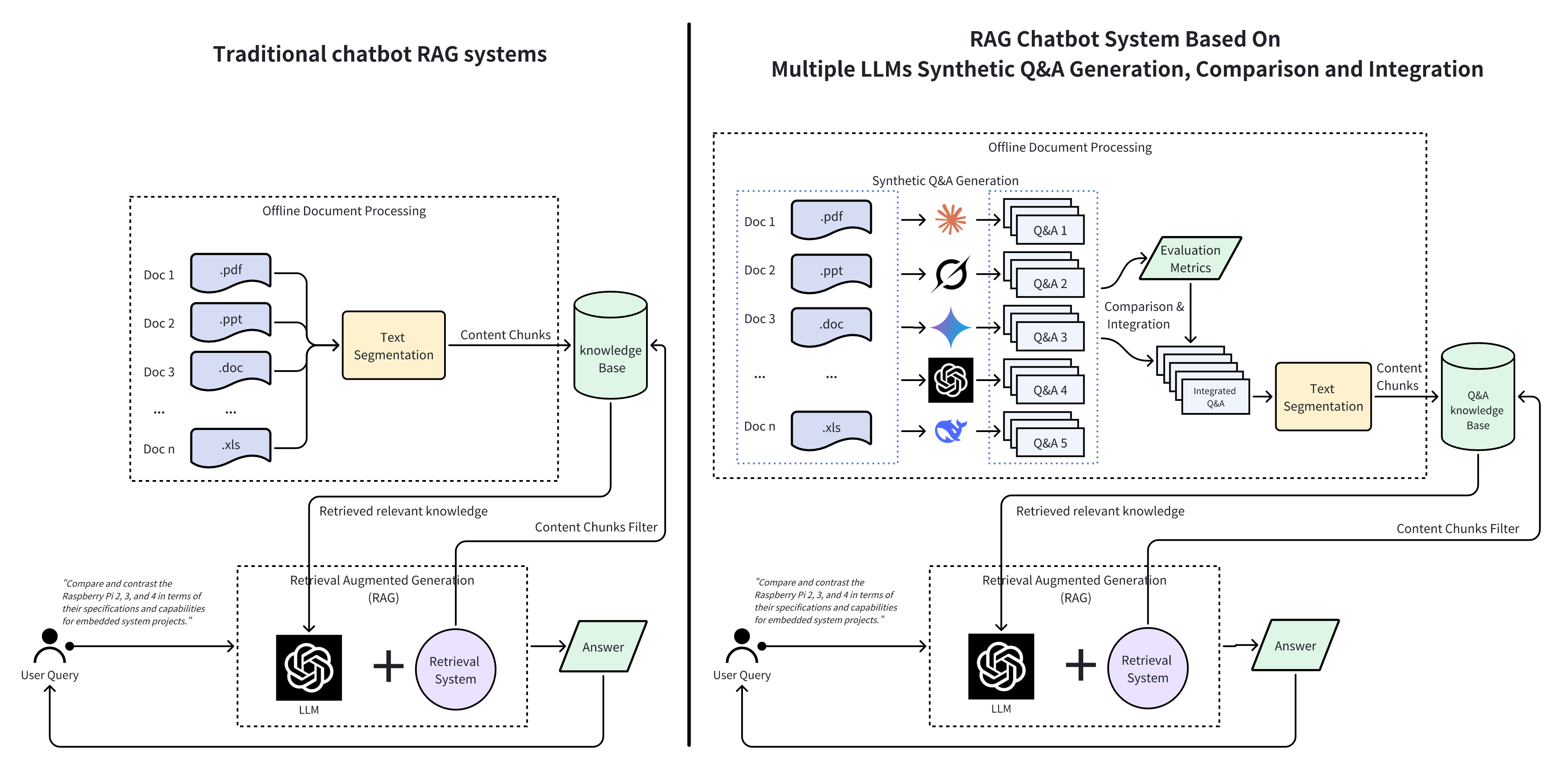

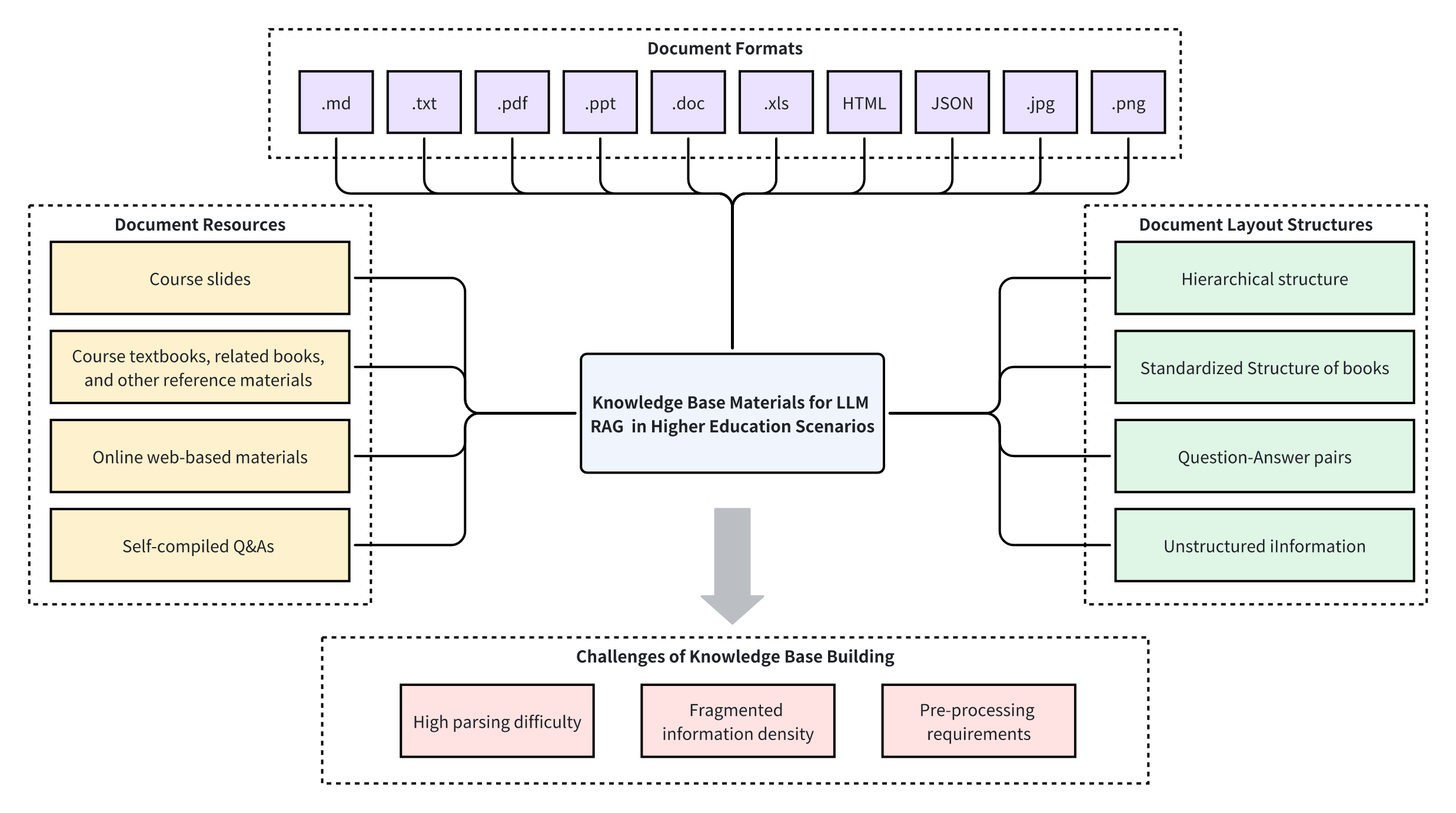

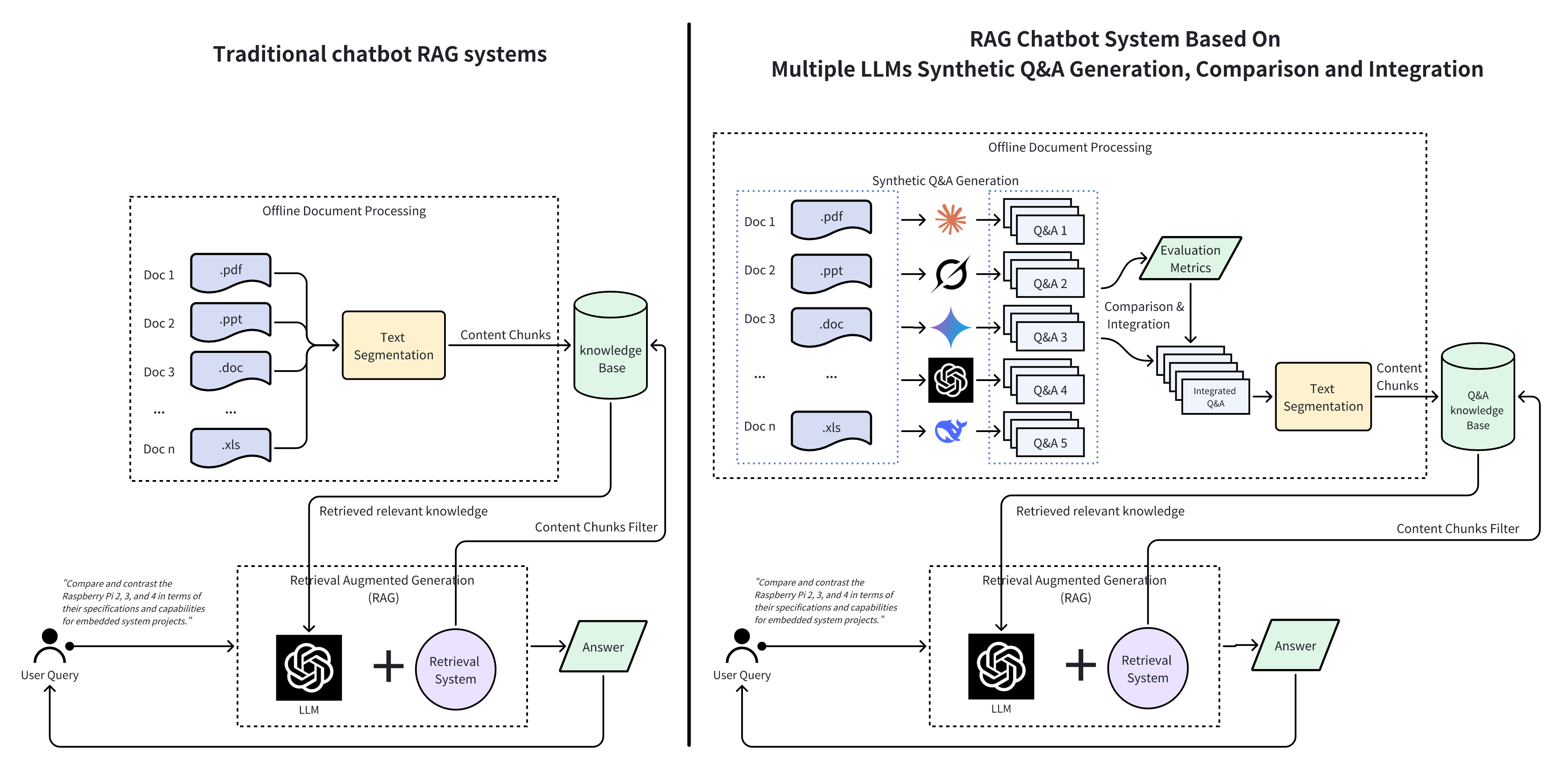

- Traditional RAG systems struggle with heterogeneous document formats and noisy source content

- Poorly chosen segmentation (chunking) strategies lower retrieval accuracy

- Hallucination is severe in course-specific knowledge scenarios

- Effectiveness is limited on proprietary educational content
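
To make the segmentation challenge concrete, here is a minimal sketch of fixed-size chunking with optional overlap. It is an illustration only, not the method used in this project: the function name `chunk_words`, the word-based windowing, and the sample sentence are all assumptions introduced here.

```python
def chunk_words(text: str, size: int, overlap: int = 0) -> list[str]:
    """Split text into windows of `size` words.

    Consecutive windows share `overlap` words. Hypothetical helper for
    illustration; real pipelines often split on headings or sentences.
    """
    if size <= 0 or not 0 <= overlap < size:
        raise ValueError("need size > 0 and 0 <= overlap < size")
    words = text.split()
    step = size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + size]))
        # Stop once the current window already reaches the end of the text.
        if start + size >= len(words):
            break
    return chunks


# Made-up course sentence, used only to show the boundary effect.
sample = "Ohm's law states that voltage equals current times resistance"
no_overlap = chunk_words(sample, size=5)
with_overlap = chunk_words(sample, size=5, overlap=2)
```

Without overlap, the statement is cut between "voltage" and "equals", so neither chunk contains the full relation. With an overlap of two words, the second chunk starts at "that voltage" and keeps the relation together, which is one reason the choice of segmentation strategy affects what a retriever can find.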

Research Objectives

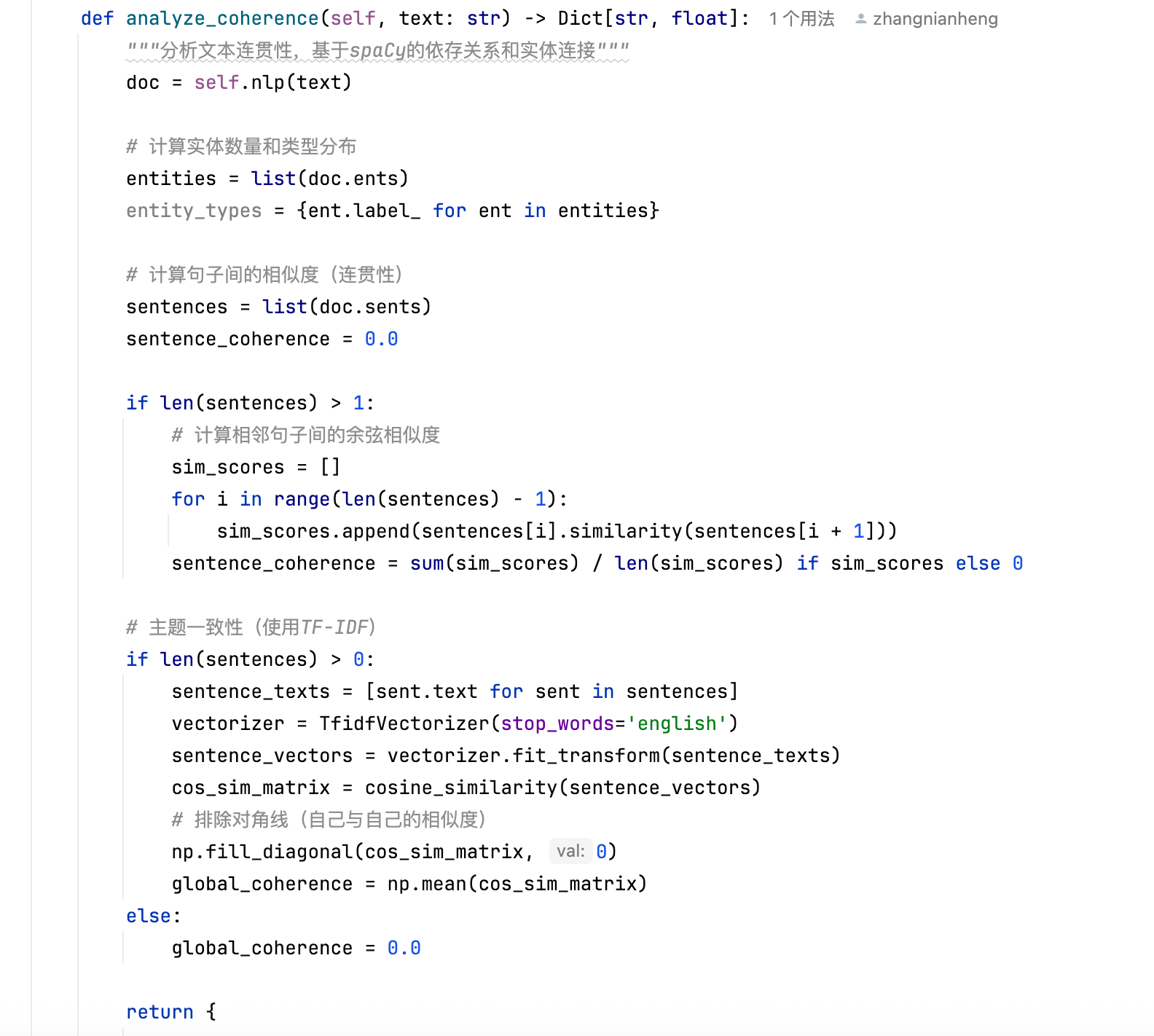

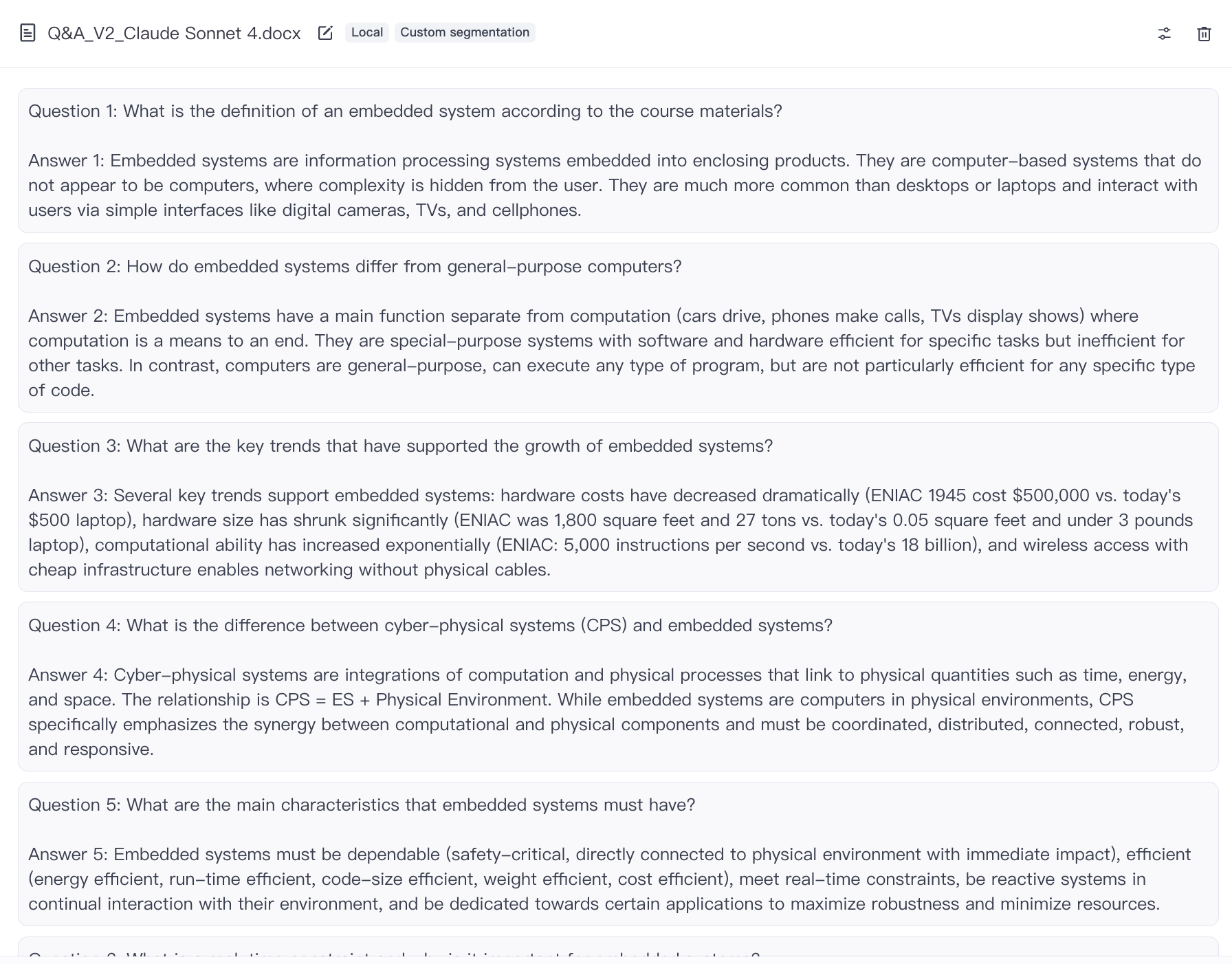

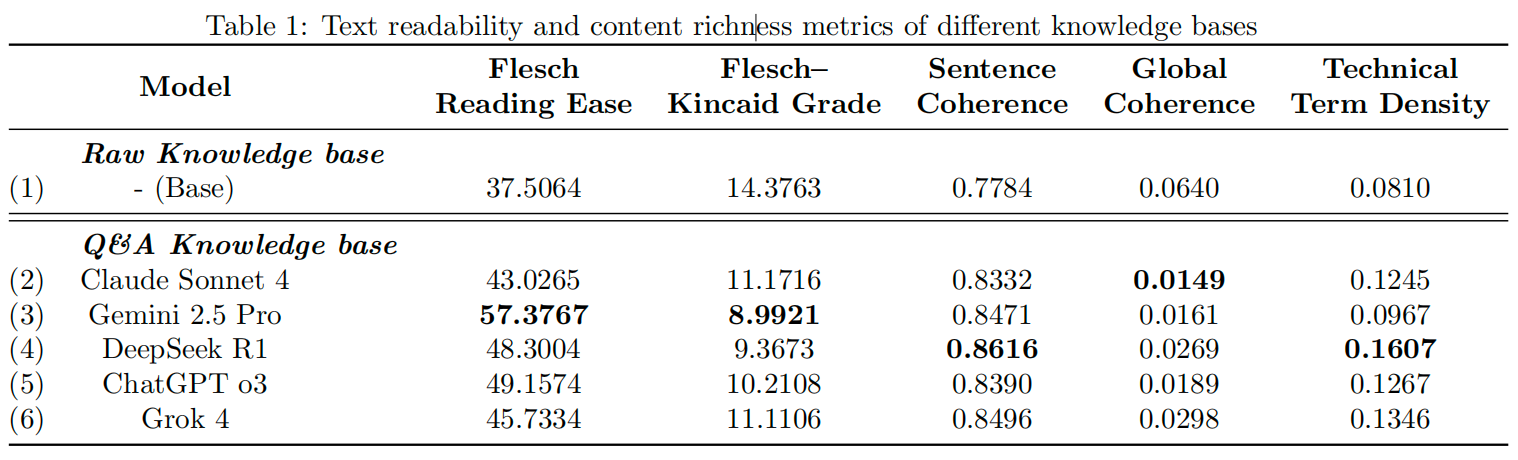

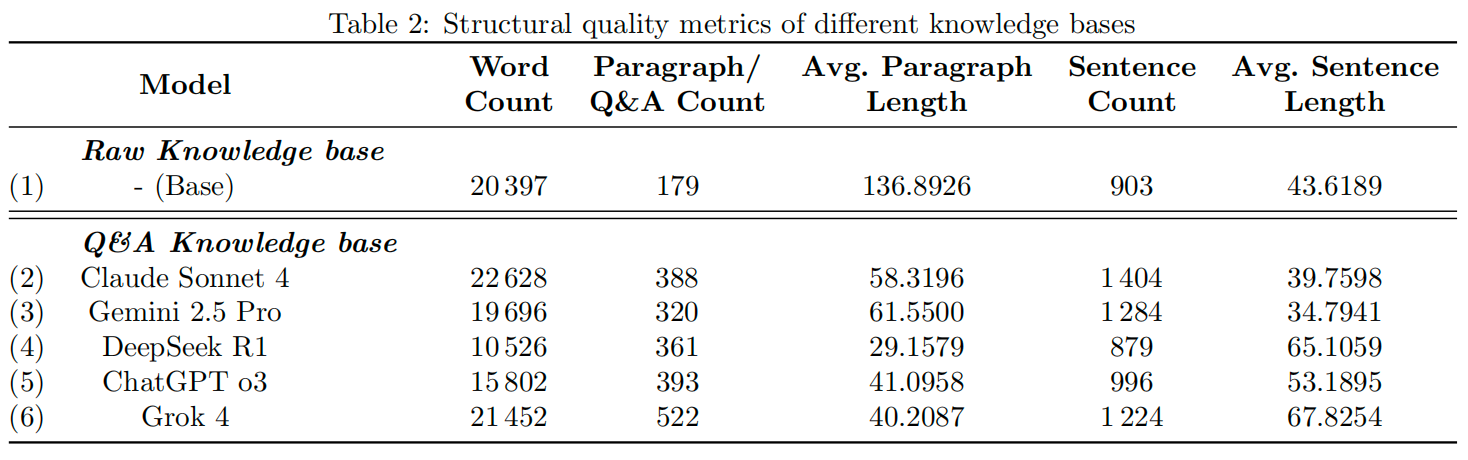

- Construct high-quality course-specific knowledge bases

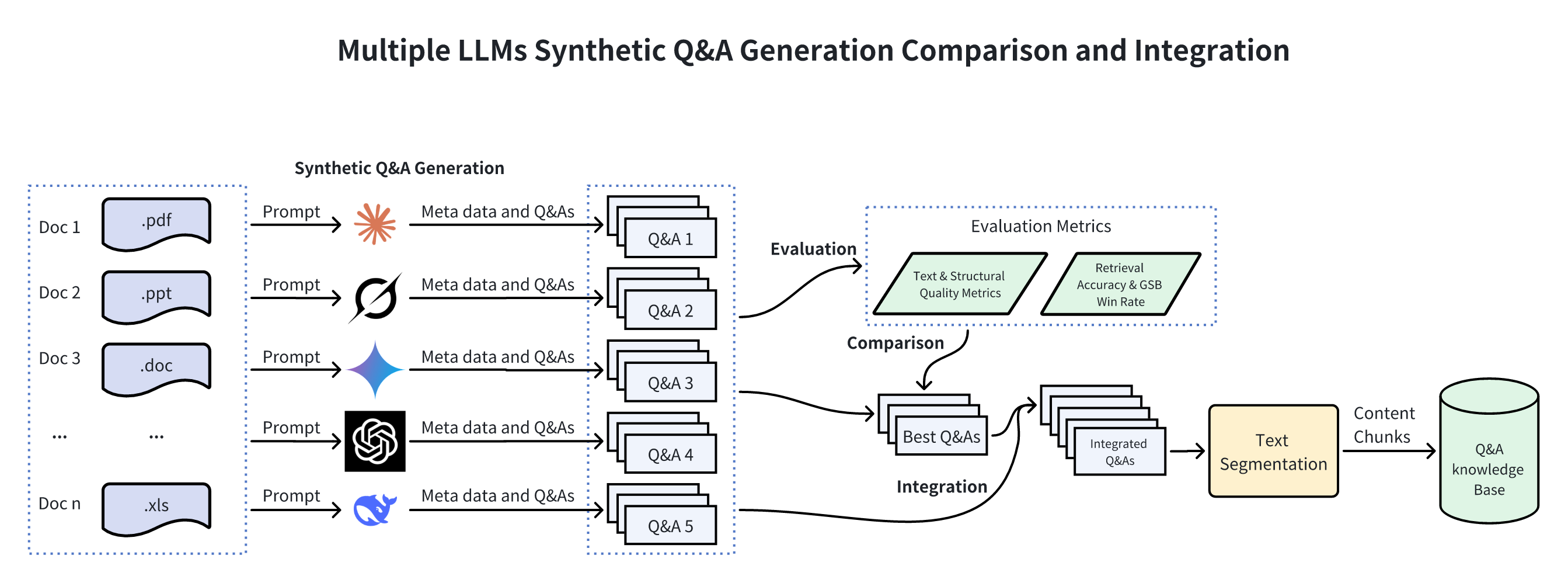

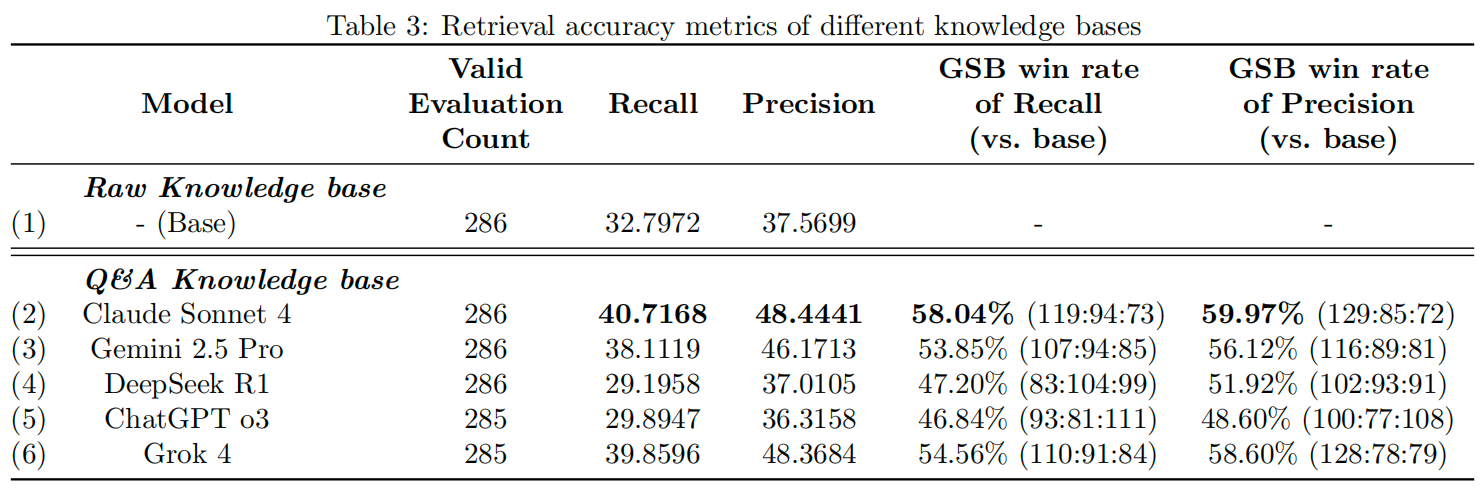

- Generate structured Q&A knowledge bases through multi-model collaborative knowledge distillation

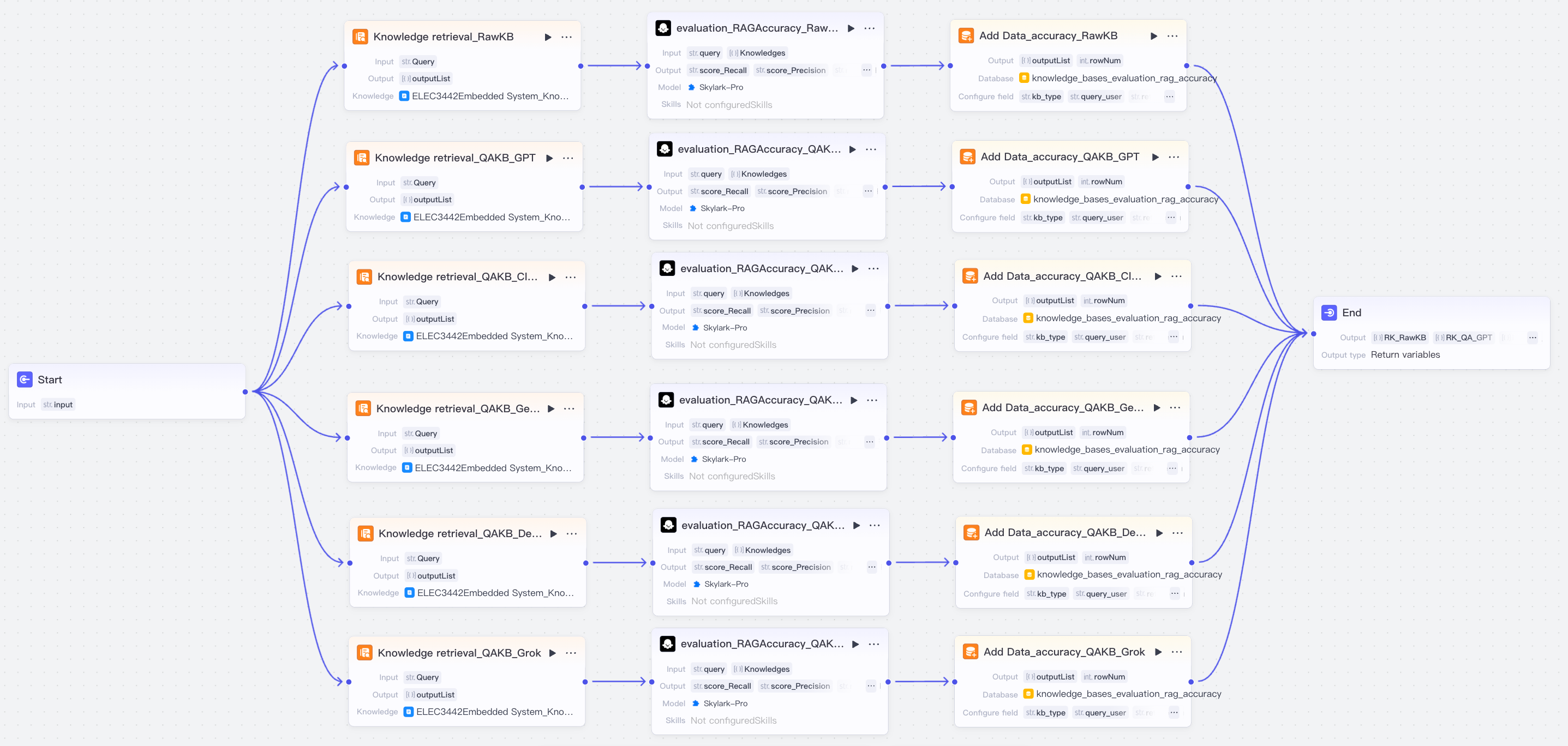

- Improve RAG retrieval accuracy in educational applications

- Validate the method's effectiveness in real educational scenarios
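
The poster does not state which metric "retrieval accuracy" refers to. As one possible reading, the sketch below computes hit rate at k: the fraction of questions for which at least one relevant chunk appears among the top k retrieved chunks. The function name `hit_rate_at_k` and the chunk identifiers are hypothetical.

```python
from collections.abc import Sequence


def hit_rate_at_k(
    ranked: Sequence[Sequence[str]],
    relevant: Sequence[set[str]],
    k: int,
) -> float:
    """Fraction of queries with at least one relevant ID in the top-k results.

    ranked[i]   -- retrieved chunk IDs for query i, best first
    relevant[i] -- ground-truth relevant chunk IDs for query i
    (Assumed data layout; not taken from the original project.)
    """
    if len(ranked) != len(relevant):
        raise ValueError("ranked and relevant must have the same length")
    if k <= 0:
        raise ValueError("k must be positive")
    if not ranked:
        return 0.0
    hits = sum(1 for r, rel in zip(ranked, relevant) if rel.intersection(r[:k]))
    return hits / len(ranked)


# Two made-up queries: the first has its relevant chunk at rank 2,
# the second never retrieves its relevant chunk.
ranked_results = [["c1", "c2", "c3"], ["c4", "c5", "c6"]]
ground_truth = [{"c2"}, {"c9"}]
score_at_2 = hit_rate_at_k(ranked_results, ground_truth, k=2)
score_at_1 = hit_rate_at_k(ranked_results, ground_truth, k=1)
```

Comparing such a score before and after a change to the knowledge base (for example, a different segmentation strategy) is one simple way to check whether retrieval actually improved.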
